TDX

First, I’ll be at Salesforce TDX next week. For those of you who are attending, and haven’t been to San Francisco lately, I’ll just say that in my recent trips into the city I’ve barely seen any “urban decay”. Come to think of it, I’ve barely seen any people, not compared to the hustle and bustle of years (and decades) ago. Mostly just happy tourists...

I’m looking forward to seeing what Salesforce has been up to recently with AI.

Models

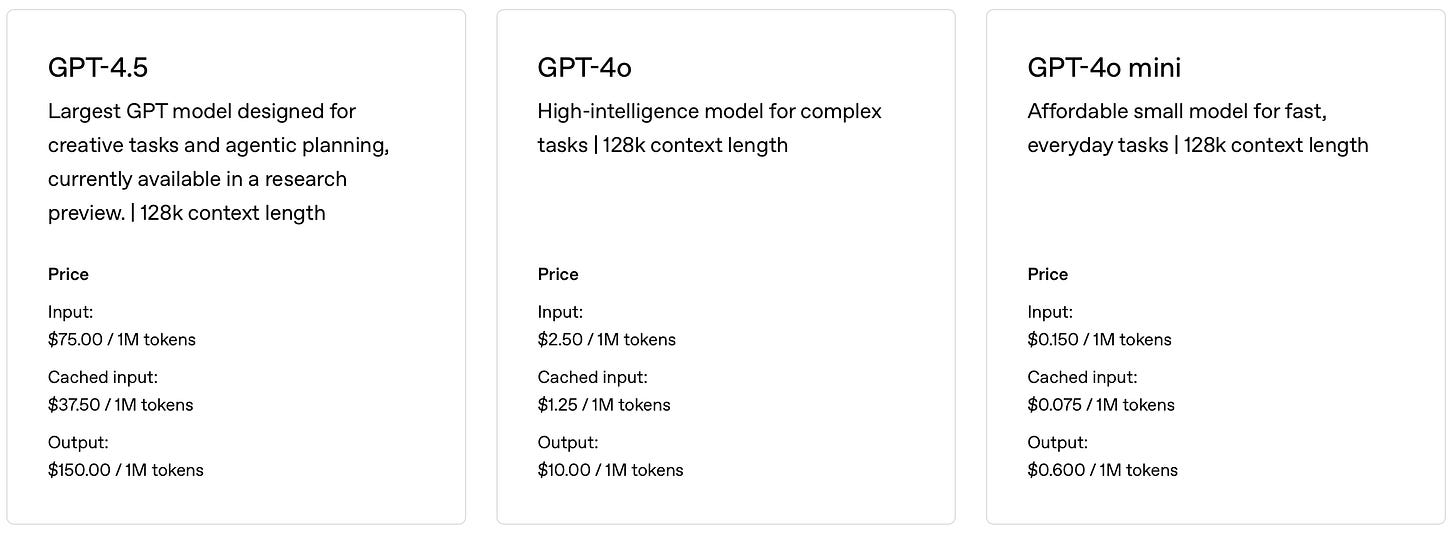

As foreshadowed, OpenAI has released its new GPT-4.5. Initially it’s available to people with the $200/month ChatGPT Pro subscription, or available via the API. I first thought I’d start moving some tasks from 4o to 4.5, assuming that the pricing would be about the same. Let me double check first:

Holy shit, that’s expensive.

GPT-4.5 is 30× more expensive for input and 15× more expensive for output than GPT-4o. I’m having a real hard time with the “add 0.5 to the version” strategy here. And OpenAI has said that in the next month or two they’re going to release GPT-5. I just have to ask, how expensive is that going to be? Is it going to be GPT-Birkin expensive?
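To put rough numbers on that, here is a back-of-the-envelope sketch. The per-million-token prices are list prices as best I recall them at launch (an assumption, so check the current pricing page), and the workload is made up purely for illustration.

```python
# Assumed list prices in USD per 1M tokens; verify against the pricing page.
PRICES = {
    "gpt-4o": {"input": 2.50, "output": 10.00},
    "gpt-4.5": {"input": 75.00, "output": 150.00},
}


def job_cost(model, input_tokens, output_tokens):
    """Return the USD cost of one job at the assumed per-million-token prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical monthly workload: 10M tokens in, 2M tokens out.
cost_4o = job_cost("gpt-4o", 10_000_000, 2_000_000)
cost_45 = job_cost("gpt-4.5", 10_000_000, 2_000_000)
ratio = cost_45 / cost_4o

print(f"GPT-4o: ${cost_4o:,.2f}  GPT-4.5: ${cost_45:,.2f}  ratio: {ratio:.1f}x")
```

The blended multiplier lands somewhere between 15× and 30× depending on how input-heavy your workload is.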

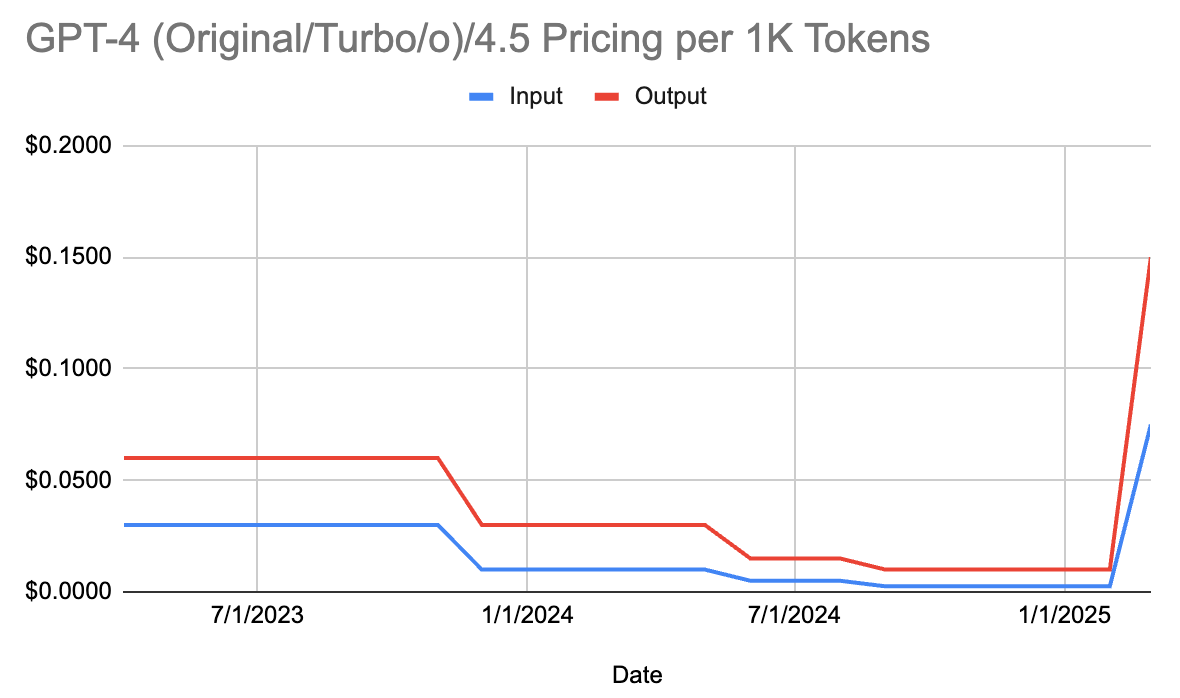

If you were to view 4.5 as a continuation of the 4 series, then this is how pricing has progressed over the past two years:

Clearly it’s not a continuation of the 4 series if it’s 15 to 30 times “better”. I don’t know what OpenAI’s strategy is here; they’ve taken a page from Anthropic’s Claude 3 Opus titled “oddly high priced model.” In interviews, OpenAI folks explain that it’s just very expensive to operate, and they’re having to buy a bunch of GPUs to scale up.

With such a stark price difference, I think this will create some interesting challenges for Salesforce’s Agentforce, which doesn’t have transparent, pass-through pricing for LLM usage. I have to imagine that GPT-4.5 either won’t be available (except maybe via a BYOM arrangement where you’re paying for the model) or Salesforce is going to have to add in tiered pricing based upon model power. We’ll see … maybe next week at TDX?

Meanwhile, Anthropic released its Claude 3.7 Sonnet model, which is a hybrid full-reasoning / some-reasoning / no-reasoning model where you control how much reasoning it will do. This is a very interesting approach, as it lets you dial up or down the amount of effort the model will invest in solving your problem. I think this kind of control is going to be the way of the future.
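As a rough sketch of what that dial looks like in practice, the helper below builds a request body with or without a reasoning budget. The field names (`thinking`, `budget_tokens`) and the model ID are from my recollection of Anthropic’s Messages API, so treat them as assumptions and check the docs; `build_request` itself is a hypothetical helper, not part of any SDK.

```python
def build_request(prompt, thinking_budget=0, answer_tokens=1024):
    """Build a request body; thinking_budget=0 means no extended reasoning."""
    body = {
        "model": "claude-3-7-sonnet-20250219",  # assumed model ID
        "max_tokens": answer_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget > 0:
        # Assumed shape of the reasoning control; max_tokens has to leave
        # room for the reasoning budget plus the visible answer.
        body["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
        body["max_tokens"] = thinking_budget + answer_tokens
    return body


quick = build_request("Summarize this email.")
careful = build_request("Find the bug in this scheduler.", thinking_budget=8000)
print(quick["max_tokens"], careful["max_tokens"])
```

The point is that effort becomes one more parameter you set per request, rather than something you choose by picking a different model.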

Elsewhere, Google just announced “Gemini 2.0 is now available to everyone” while at the same time saying they’ve only released an “experimental version of Gemini 2.0 Pro”. My experience is that “experimental” models can change their behavior pretty dramatically from release to release, so I’m cautious about 2.0 Pro for now.

I want to add a bit of my experiences with various models to the conversation. You can’t ever say absolutely that one model is better than another (at least of roughly comparable models; obviously GPT-4 is better than GPT-2). First, it’s not just the power of the model itself you have to compare, but the environment around it. A powerful car with a terrible transmission is a terrible car, no matter how many bhp the engine has.

For example, OpenAI vs. Google. OpenAI’s API and client libraries are reliable and relatively bug-free, and the service almost always responds with consistent performance. Google, on the other hand, has a lot of beta APIs and libraries with weird bugs, and its service will periodically just decide it’s too busy for you right now. You can’t go a week before you have to start dealing with Google’s I’m-having-the-vapors error and doing the “Feeling better yet, Googs?” retry logic loop. What does Google suggest doing about this (apart from waiting for it to feel better)? Buy dedicated, reserved AI capacity. Which is fine if you know precisely how much you need or you can afford to overcommit. For the rest of us who just want on-demand AI services: ¯\_(ツ)_/¯
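For anyone who hasn’t had the pleasure, the retry loop looks roughly like this: a minimal sketch of exponential backoff with jitter. `ServiceBusyError` is a hypothetical stand-in for whatever “overloaded, try later” exception your client library actually raises, and the delays are arbitrary defaults.

```python
import random
import time


class ServiceBusyError(Exception):
    """Hypothetical stand-in for a provider's 'too busy right now' error."""


def call_with_retry(fn, max_attempts=5, base_delay=1.0, max_delay=30.0,
                    sleep=time.sleep):
    """Call fn(); on ServiceBusyError, back off exponentially and try again."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except ServiceBusyError:
            if attempt == max_attempts:
                raise  # out of patience, let the caller deal with it
            # Double the wait each time, capped at max_delay, with jitter
            # so a crowd of clients doesn't retry in lockstep.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay * random.uniform(0.5, 1.0))


# Demo: a fake service that has the vapors twice, then recovers.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] <= 2:
        raise ServiceBusyError("feeling faint")
    return "ok"


print(call_with_retry(flaky, sleep=lambda seconds: None))
```

The `sleep` parameter exists only so the demo (and tests) can skip the real waiting.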

Copilots

There are so many copilots today that it’s starting to look like a major airline went out of business and its employees are all milling around Home Depot looking for day work. There’s …

The OG Copilot from GitHub, which suddenly seems to be free, or maybe not

Google’s newish Gemini Code Assist which is also free, I think?

Anthropic now has a Claude Code, which seems to cost $0.10+ every time you ask it to do something.

Then you have non-LLM vendors building custom AI models and apps, for example:

Cursor from Anysphere (Free, $20/month, $40/month tiers), which is just Visual Studio Code re-skinned and sprinkled with powdered-sugar AI.

Poolside, which I cannot figure out

Magic, which costs somewhere between $20/month and $450/month

A variety of home-brew, hope you have a big GPU, local-llama guys.

My experiences?

GitHub’s is like a bored dog that humps your sofa. If you have a stick, it will fetch. If you don’t, it’s annoying as hell. I have a macro key programmed on my keyboard to turn it on when I have a stick in need of fetching and otherwise off. Still, it’s the one I use most often.

Google’s new copilot, when I asked it to do something simple with three lines of code, went away for a long while and rewrote my entire source code file. What?? UNDO! UNDO! UNDO!

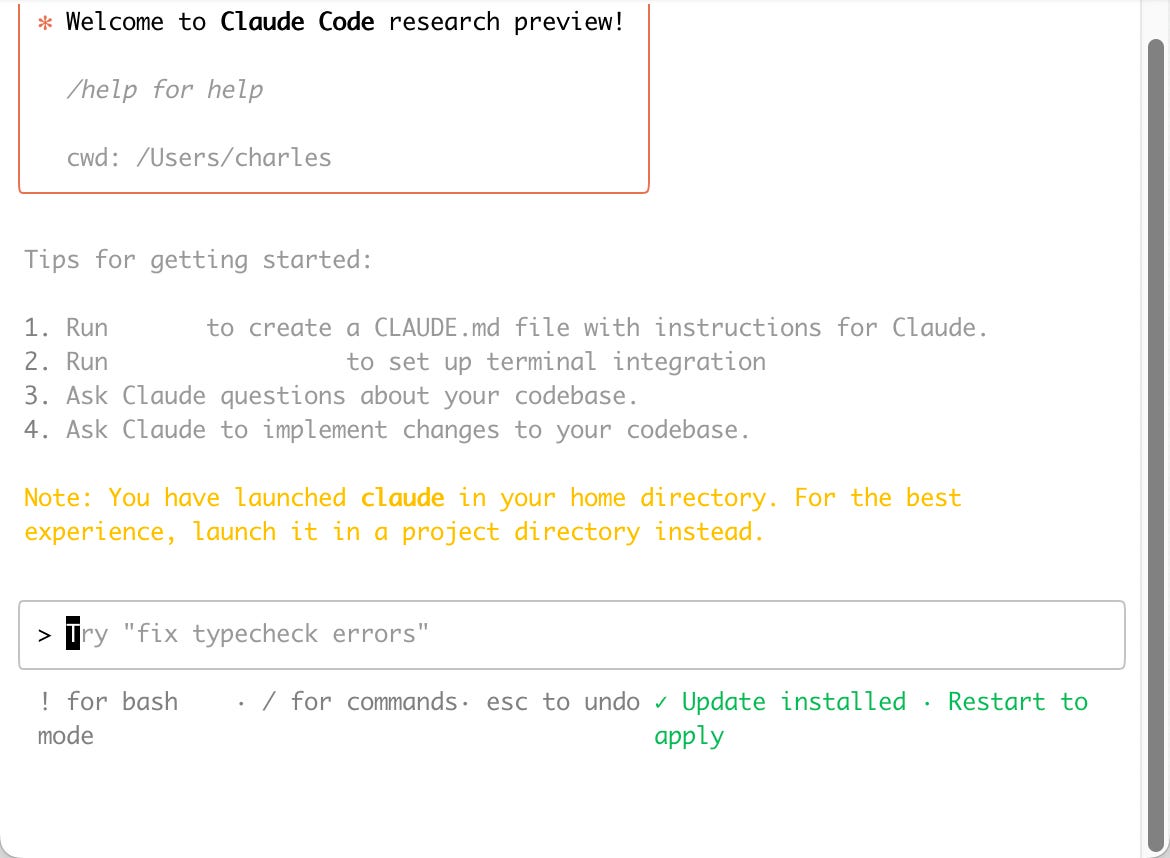

Anthropic’s Claude Code is like the days of Quick Basic all over again, with a “CHUI” instead of GUI:

In general, all of them are useful in carefully chosen situations. But in the wrong situation they can cause damage. I recently asked GitHub’s to organize my Python imports (which can get messy in a complicated program). It neatly reorganized them … and then deleted a couple of them for no good reason and broke my code. Sigh.
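One cheap guardrail for that particular failure is to compare the imported names before and after the AI touches the file. This is a minimal sketch using Python’s standard `ast` module; `imported_names` and `dropped_imports` are hypothetical helper names, and it only catches missing imports, not every way an edit can break code.

```python
import ast


def imported_names(source):
    """Return the set of names a module binds via its import statements."""
    names = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                # "import a.b" binds "a"; "import a.b as c" binds "c".
                names.add(alias.asname or alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            for alias in node.names:
                names.add(alias.asname or alias.name)
    return names


def dropped_imports(before, after):
    """List names imported in `before` that are no longer imported in `after`."""
    return sorted(imported_names(before) - imported_names(after))


before = "import os\nimport sys\nfrom pathlib import Path\nimport numpy as np\n"
after = "from pathlib import Path\nimport os\n"
print(dropped_imports(before, after))  # ['np', 'sys']
```

An empty list means the reorganized file still imports everything the original did, whatever order it ended up in.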

That’s the problem with Copilots: the threshold to be “good enough” to use all the time is really very high, and probably higher than they are right now.

Which is not to say that AI isn’t incredibly useful in developing code. I just find it vastly better to develop and refine code snippets in the normal chat interface rather than in the IDE. That gives me a clear firewall between AI and the code that I control, and it often takes several rounds of refinement before I have code I want to try.

NVIDIA vs. DeepSeek

NVIDIA’s stock recovered most of its value from the DeepSeek scare:

But a lowering tide drops all boats (to turn a phrase on its head), so the recovery was short-lived (α and β are harsh taskmasters). Still, I don’t think anyone is still saying that DeepSeek means nobody needs a lot of GPUs. Especially OpenAI, who claims to be hoovering up GPUs for 4.5. I don’t care what DeepSeek says, you’re never going to be able to run a top-tier model on your TI graphing calculator from high school.

Salesforce Jumps on Google

I’m not surprised; there was talk of this two years ago when I was still at Salesforce. Google is one-stop shopping: networking, storage, compute, and AI. AWS was supposed to fill this role, but AWS’s AI offerings are lagging the industry. Conversely, Google keeps coming up with nifty gizmos: Colab, NotebookLM, etc. Everyone wants to hang out with the cool(er) kids.

Does this mean Salesforce is going to ditch OpenAI? Probably not, at least not right away. Here’s the challenge in doing that: if you switch out models from under Agentforce, there’s going to be an immense amount of breakage. That’s why there’s still a lot of GPT-3.5 usage in there: it’s just too painful to switch unless you’re looking to do a rewrite anyway. Still, at some point net new customers are going to get Gemini instead, I suspect. Especially if the alternative is paying for GPT-Birkin.

Perhaps Google is going to buy Salesforce? That seems unlikely to me, although not very unlikely a few years down the road.

AI’s Trough of Disillusionment / Peak of Irrational Exuberance

So many people on social media are saying that AI is useless, a threat, etc. It seems to me it’s mostly people coming up with inappropriate uses of AI and then being disappointed (or relieved, I guess) when it doesn’t work, and blaming AI. AI has become the new weather: something everybody likes to complain about but can do nothing to change.

On the other hand, Anthropic is on the cusp of another $3.5 billion in funding, OpenAI is looking for a fresh cash infusion (all those Birkins for GPT-5) and funding as a whole seems to be continuing madly.

I’m at a loss to know what to make of all of it, other than it’s going to be interesting!

How many of your readers know who Jane Birkin was or why Birkin is a synonym for ‘expensive’?