Take a look at this NY Times article (it’s a gifted link, so you don’t need to be a subscriber to read it):

It’s a quiz where you have to guess which videos are real and which are AI generated.

I gave up. I couldn’t tell them apart. Damn.

Maybe if I had approached it forensically, I might have figured it out, but the point of the article was whether a normal person just coming across a video would think it was fake.

To be fair, the clips are only seconds long, so there isn’t enough time to really scrutinize them. One hallmark (at the moment) of AI-created videos is that they’re relatively short. That’s why you see AI-created “movies” built from a series of very short scenes. They can’t do this, yet:

I know the top-of-mind issue for most people with AI is jobs¹, but for me, misinformation and disinformation are the most significant concerns we should have as a society. I really thought the last election was going to see a deluge of fake content, but I was too optimistic about technology and too pessimistic about society. Or just a bit early…

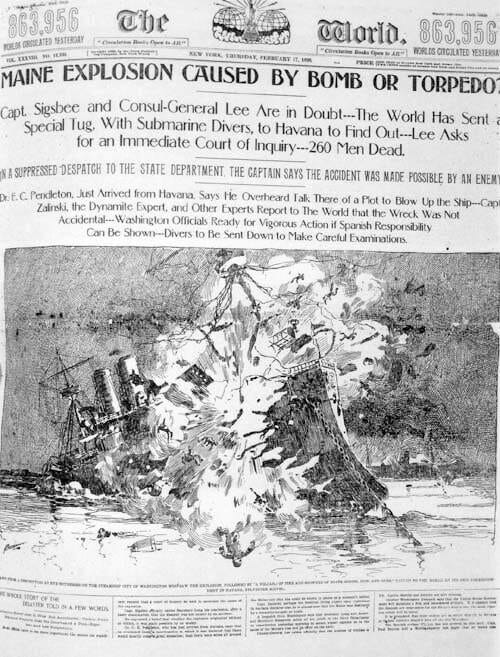

It’s not just election-influencing that’s a concern. It’s videos that have an effect like this did:

Truth was, it was just an accident, no Spanish perfidy involved. But when people want a war, they’ll make all sorts of ship up.

It’s not enough to know that this exists and how it is created. We also have to know our limits in being able to identify fake videos.

¹ I have a bunch of posts in the works on the topic explaining why I believe that, while AI will, yes, affect jobs, it won’t be the big job killer everyone thinks it will be. But it’s turning out to be a much larger and harder topic to write about than I expected, and the situation is still fluid.