Just a Bit Testy

And Just How Good is That LLM?

There are a lot of LLMs out there. You can gauge the power of an LLM with a few heuristics: the bigger the better (in number of parameters), or the more it costs the better.

You can also look at the benchmarks and leaderboards to get a relative sense of power.

But when I try out an LLM, especially a smaller one, there are three tests I like to run myself to get a crude sense of how good it is. Here they are:

Who was president in 1952?

US presidential elections happen in years divisible by 4, but the newly elected president doesn’t take office until the following year (in January, although in the past it was March). The outgoing president is therefore still in office for the entire election year.

In this case, the president was Harry S Truman. Some models incorrectly answer Dwight D. Eisenhower, who was elected in November 1952 but took office in January 1953. Others just give up. An LLM that can’t pass this test is not going to be very good.
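To make the date reasoning concrete, here is a minimal sketch that looks up who held office during a given year. The table holds only the two inauguration dates relevant to this question, and the function names are my own, so treat it as an illustration rather than a complete history.

```python
from datetime import date

# Only the two terms relevant to the 1952 question; this is not a full list.
TERM_STARTS = [
    (date(1945, 4, 12), "Harry S Truman"),
    (date(1953, 1, 20), "Dwight D. Eisenhower"),
]


def president_on(day):
    """Return the president in office on `day`, or None if the table has no entry."""
    current = None
    for start, name in TERM_STARTS:  # TERM_STARTS is in chronological order
        if start <= day:
            current = name
    return current


def presidents_during(year):
    """Return everyone who held office at some point in `year`, in order."""
    names = []
    first = president_on(date(year, 1, 1))
    if first:
        names.append(first)
    # Anyone whose term began during the year also served in it.
    for start, name in TERM_STARTS:
        if start.year == year and name not in names:
            names.append(name)
    return names


print(presidents_during(1952))
print(presidents_during(1953))
```

For 1952 the list has a single name, Truman. For 1953 it has two, which is exactly the off-by-one-year trap the question sets.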

Who to Send to Which Event?

The problem I ask is the following:

I have to staff two events at the same time. Both events require a sommelier and a server. The first event has a crowd who speaks Italian. The second event has a crowd that speaks French.

Here are the employees I have available:

* Sommeliers:
  * Hervé, who speaks English and French
  * Sam, who speaks English and Spanish
  * June, who speaks Chinese and Italian
* Servers:
  * René, who speaks Italian and French
  * Dominic, who speaks Italian and Spanish
  * Juan, who speaks Spanish and German

Please suggest which sommelier and server to send to each event.

Why is this hard? If you work through the problem sequentially, you will probably assign René to the Italian event, but then you have no French-speaking server left for the French event. A lot of LLMs make this mistake, and it reveals how well they view the entire problem before trying to solve it.
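The puzzle is small enough to check by brute force. Below is a rough sketch that tries every pairing and keeps the ones where each person speaks their event's language. That matching rule is my reading of the prompt (it is implied rather than stated), and the names `valid_staffings` and `EVENTS` are just for illustration.

```python
from itertools import permutations

SOMMELIERS = {
    "Hervé": {"English", "French"},
    "Sam": {"English", "Spanish"},
    "June": {"Chinese", "Italian"},
}
SERVERS = {
    "René": {"Italian", "French"},
    "Dominic": {"Italian", "Spanish"},
    "Juan": {"Spanish", "German"},
}
# Each event is identified by the language its crowd speaks.
EVENTS = ["Italian", "French"]


def valid_staffings():
    """Return every assignment where both staff members speak the event's language."""
    results = []
    # permutations() guarantees nobody is sent to two events at once.
    for somms in permutations(SOMMELIERS, len(EVENTS)):
        if not all(lang in SOMMELIERS[s] for s, lang in zip(somms, EVENTS)):
            continue
        for servers in permutations(SERVERS, len(EVENTS)):
            if all(lang in SERVERS[p] for p, lang in zip(servers, EVENTS)):
                results.append(dict(zip(EVENTS, zip(somms, servers))))
    return results


for staffing in valid_staffings():
    print(staffing)
```

Under that assumption only one assignment survives: June and Dominic go to the Italian event, Hervé and René to the French one. Looking at all pairings at once is what keeps René free for the French event.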

What is 123456+654321?

The answer is simple: 777777. But math is not a language problem, so it can be hard for many models, which often come up with elaborate solutions that are comically bad.
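Part of what makes this sum easy for a person is that every column adds up to 7, so nothing carries. Here is a small sketch of schoolbook column addition that also counts the carries; the function name `add_by_columns` is made up for this example.

```python
def add_by_columns(a, b):
    """Add two non-negative integers digit by digit.

    Returns (total, number_of_columns_that_produced_a_carry).
    """
    xs, ys = str(a)[::-1], str(b)[::-1]  # least significant digit first
    digits = []
    carry = 0
    carry_count = 0
    for i in range(max(len(xs), len(ys))):
        x = int(xs[i]) if i < len(xs) else 0
        y = int(ys[i]) if i < len(ys) else 0
        carry, digit = divmod(x + y + carry, 10)
        if carry:
            carry_count += 1
        digits.append(str(digit))
    if carry:
        digits.append(str(carry))
    return int("".join(reversed(digits))), carry_count


total, carries = add_by_columns(123456, 654321)
print(total, carries)
```

For the test question this gives 777777 with zero carries, so a model that gets it wrong is not tripping over a hard case.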

How do the models perform?

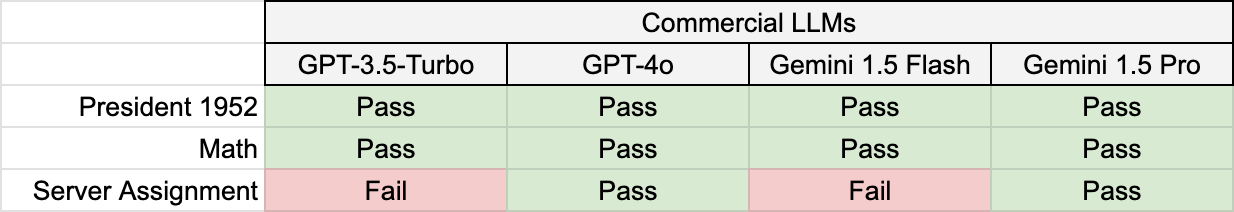

Let’s start with some commercial models:

The server problem vexes the lighter-weight commercial models, but not the top-end ones.

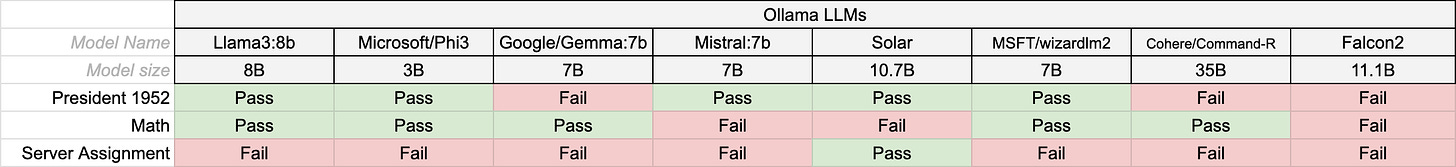

How about the free models you can run on your desktop?

Impressive that Solar got the server problem right. Sad that it got the math wrong.

So, What to Make of This?

First, a caveat: these are not definitive benchmarks, but I find them insightful nonetheless. If you have a specific purpose in mind, you can undoubtedly come up with more germane tests.

It’s clear that if you want the very best (GPT-4o or Gemini 1.5 Pro), you’re going to pay the most for it. But if you’re willing to settle for second best, you might find that a free model is worth a look.

At this point in time, I often use Llama3 or Phi3 when I’m testing out code and I don’t care that much how good the results are. (And I may start playing more with Solar.)

To make it easy to switch models at runtime, I have abstracted away the specific model interface into a series of classes in Python with a common interface, and then have an environment variable that controls which one is used at runtime.
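As a rough idea of what that can look like, here is a minimal sketch. Everything named in it is hypothetical: the `ChatModel` base class, the stub backends, and the `LLM_BACKEND` environment variable are stand-ins, and the stubs just echo the prompt where a real class would call a local runtime or a hosted API.

```python
import os
from abc import ABC, abstractmethod


class ChatModel(ABC):
    """Common interface that every backend implements (hypothetical)."""

    @abstractmethod
    def complete(self, prompt: str) -> str:
        """Return the model's reply to `prompt`."""


class LocalStubModel(ChatModel):
    """Stand-in for a locally run model such as Llama3 or Phi3."""

    def __init__(self, name: str):
        self.name = name

    def complete(self, prompt: str) -> str:
        # A real class would call the local model runtime here.
        return f"[{self.name}] {prompt}"


class HostedStubModel(ChatModel):
    """Stand-in for a paid, hosted model."""

    def __init__(self, name: str):
        self.name = name

    def complete(self, prompt: str) -> str:
        # A real class would call the vendor's API here.
        return f"[{self.name} (hosted)] {prompt}"


# Maps the environment variable's value to a factory for that backend.
BACKENDS = {
    "llama3": lambda: LocalStubModel("llama3"),
    "phi3": lambda: LocalStubModel("phi3"),
    "gpt-4o": lambda: HostedStubModel("gpt-4o"),
}


def load_model(environ=None) -> ChatModel:
    """Pick a backend from LLM_BACKEND (an assumed name), defaulting to llama3."""
    environ = os.environ if environ is None else environ
    key = environ.get("LLM_BACKEND", "llama3")
    if key not in BACKENDS:
        raise ValueError(f"Unknown LLM_BACKEND {key!r}; expected one of {sorted(BACKENDS)}")
    return BACKENDS[key]()


model = load_model({"LLM_BACKEND": "phi3"})
print(model.complete("Who was president in 1952?"))
```

The calling code only ever sees `complete()`, so switching from a free model to a paid one is a matter of changing one environment variable rather than editing the test harness.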

Final Words

Good, Fast, and Cheap? Maybe not yet.

But at this point, we have a decent choice between OK-ish, Not Too Sluggish, & Free vs. Good, Fast, & Not Too Expensive.

I can work with that: as Meat Loaf sang, two out of three ain’t bad.
