In my last post, I posited that AI is going to be good, fast, and free in the (near) future. Why do I think that? Over the next few posts I’m going to look at each of these (good/fast/free), see where we are today, and try to project into the future.

This post starts with “free”. Let me start with what “free” means to me in this context.1

Free means you’ll be able to run LLMs on “normal” computers that you use for other purposes. You still have to buy them, pay for electricity, etc. But the cost will be so low and so buried that you won’t notice. You will be able to run purpose-built LLMs directly on your phone.2

You can already run LLMs on your laptop/desktop today, and they can produce results that, a year ago, would have impressed you.3 Don’t believe me? Let me prove it to you, right now, right here. This will only take a couple of minutes and be simpler than you think.

First, go to https://ollama.com and download it.4

You’ll find the documentation here, if you need it: https://github.com/ollama/ollama/blob/main/README.md

Open the downloaded app to install it. On the Mac, it moves itself into the Applications folder, creates a login item, and installs command line tools (requiring permission). Don’t bother with running llama2 like it suggests: there’s a newer and better model we’ll use.

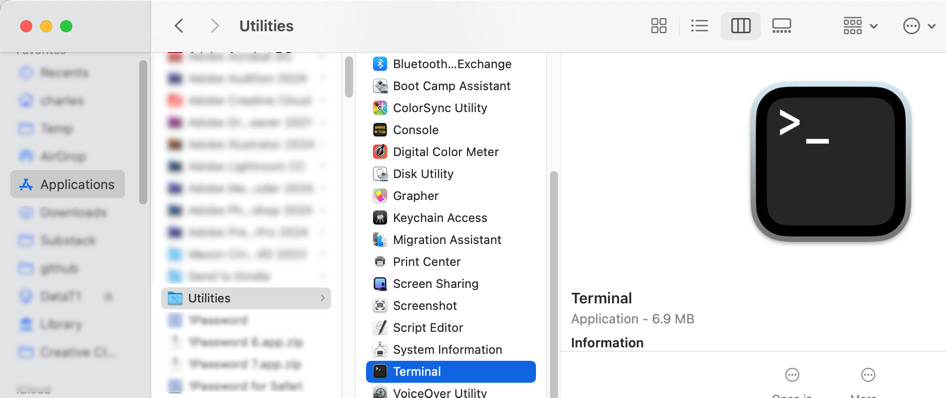

When it’s installed, open a shell, terminal, whatever your OS calls it. On the Mac, it’s a bit buried in Applications: 5

Now, issue the following command:

ollama run phi3

That starts the download of Microsoft’s latest Phi3 model.

When it’s done it will drop you in a chat session. The next time you run it, it won’t do the download again:

And there you are, running an LLM on your local computer, one that is pretty much as good as ChatGPT was a year ago (GPT-3.5).
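The chat session isn’t the only way in. While Ollama is running, it also exposes a small HTTP API on your machine, so your own scripts can talk to the model. Here is a minimal sketch in Python using only the standard library. It assumes Ollama is listening on its default local address (http://localhost:11434) and that you’ve already pulled phi3 as above; the function names are mine, made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama's local server is listening on its default address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "phi3") -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    # "stream": False asks for one complete JSON reply instead of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(raw_body: bytes) -> str:
    """Pull the generated text out of the JSON body the server sends back."""
    return json.loads(raw_body)["response"]


def ask_local_model(prompt: str, model: str = "phi3") -> str:
    """Send one prompt to the local model and return its full answer."""
    # Generous timeout: a laptop can take a while on a long answer.
    with urllib.request.urlopen(build_request(prompt, model), timeout=300) as resp:
        return extract_reply(resp.read())
```

With Ollama running, something like `print(ask_local_model("Why is the sky blue?"))` should print the model’s answer. Nothing leaves your computer along the way.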

I picked Phi3 for this exercise as it’s small but decent. You can see the full range of models available to you here:

https://github.com/ollama/ollama/blob/main/README.md#model-library
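If you start collecting models from that list, it helps to know which ones are already on your disk. The sketch below asks the local Ollama server for its installed models. Same assumptions as before: the server is on its default address (http://localhost:11434), and the helper names are hypothetical ones I picked for this example.

```python
import json
import urllib.request

# Assumption: Ollama's local server is listening on its default address.
TAGS_URL = "http://localhost:11434/api/tags"


def parse_model_names(raw_body: bytes) -> list[str]:
    """Extract model names (e.g. "phi3:latest") from the server's JSON reply."""
    data = json.loads(raw_body)
    return [entry["name"] for entry in data.get("models", [])]


def is_installed(model: str, names: list[str]) -> bool:
    """True if `model` matches a local name, with or without its ":tag" suffix."""
    return any(n == model or n.split(":", 1)[0] == model for n in names)


def installed_models() -> list[str]:
    """Ask the local server which models have already been downloaded."""
    with urllib.request.urlopen(TAGS_URL, timeout=10) as resp:
        return parse_model_names(resp.read())
```

Calling `is_installed("phi3", installed_models())` tells you whether the next `ollama run phi3` will start right away or kick off a download first.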

So What?

It might seem like it’s not that impressive, running an LLM on your desktop when there are all sorts of free services (like ChatGPT 3.5) available for you that are faster and better. So why get excited over running a local copy?

I’ll give you two analogies to ponder.

First analogy: the electric motor.

I know, that seems like an odd choice! But when electric motors first came out, they were massive and expensive. You couldn’t just stick them anywhere you wanted. So what did you do in your factory when you needed power all over the place? This:

Yeah, you created shafts and belts to distribute power from a central motor.6

Then motors got so cheap that you could put them anywhere you needed them. Think about it: how many motors do you have in your house today? Toothbrushes, hair dryers, razors, power tools, kitchen appliances (including refrigeration), garage door openers, HVAC, the little vibrating thingy in your phone, fans in computers, and on and on and on. They’re everywhere, and we don’t think twice about them.

Second analogy: computers.

Both of these have roughly the same computing power:

What a come-down, from data center to cheesy toy! But there are computers in everything, far more than electric motors — in fact, anything with a motor in it today probably has a microprocessor in it as well.

The Future: AI Everywhere

Now, imagine Furby with full speech recognition, a built-in LLM, and text-to-speech. It will happen, mark my words.7

That’s our dystopian hellscape waiting to happen: Whatever you do, don’t give Furby your wifi password. You’ve been warned.

On the plus side, the need for your smart home device (Amazon Echo, etc.) to send every utterance away to a data center for processing will go away. Amazon probably won’t give up on the snooping, but it should be easy within a year or two to build a cost-effective device that does most of the processing locally and is able to protect your privacy. (Just don’t make it in a Furby form-factor, please?)

Yeah, but how about today, right now? Is there any real benefit we can get “for free”?

I’ll explore that a bit in my next newsletter, where we’ll build some tools to take advantage of local AI.

1. It’s not “nothing left to lose”…

2. Maybe a future phone, but given the way AI cores are getting put into devices, not too far in the future.

3. We’re all jaded now.

4. If you’re a Linux person, congrats, you get to execute a shell script instead of just downloading a finished executable.

5. If you’re going to be doing any amount of work in the shell on the Mac, get the free and much better iTerm2.

6. This technology pre-dates electric motors, but it was definitely used with electric motors as well.

7. Also, I predict that the number of Furbys murdered will rise. Cold-blooded Furbycide. Odds are Furby won’t be governed by the three laws of robotics, though, so you’ll have to be sneaky about it. Don’t let AI-powered Billy Bass see you or it will warn Furby.