If you were watching the presentations from Dreamforce ’23, you no doubt saw “Copilots” mentioned a lot, especially in terms of making them more extensible. The presentations didn’t go into detail about how that all worked, but they did show bits and pieces of connecting a prebuilt “skill” to a Copilot.

The demo walked through a scenario where a chat session is asked for tracking information about an order, but GPT doesn’t know the answer, having been trained on data frozen in 2021:

The demo shows adding a “skill” to the chatbot to allow it to look up tracking information:

Impressive! Magic, one might dare say!

But as with every other magic trick, there’s something you’re not seeing that makes it work, so let’s delve into what goes into building this sort of capability: functions.[1]

There’s a lot of functional information coming your way!

Chat without Functions

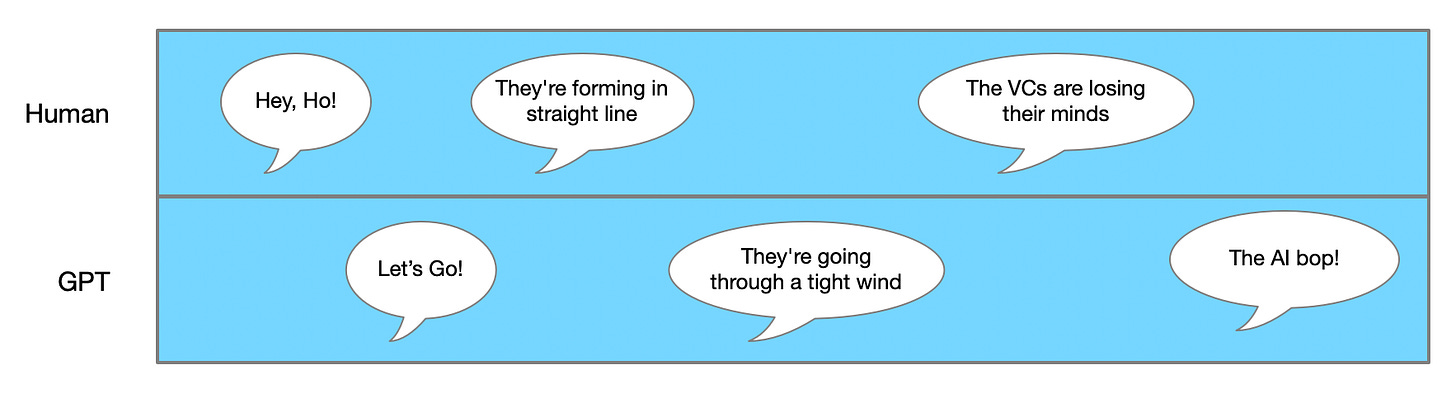

When you chat with an LLM (like, say, ChatGPT), the action just bounces back and forth:

Whatever the AI agent uses to respond to you is knowledge that was last updated in 2021. So a 1970s Ramones lyric? No problem. Something from Taylor Swift’s 2022 album Midnights? No dice.[2]

How do we turn GPT into a Swiftie? Or make it able to converse on any other topic OpenAI couldn’t hoover up from the internet in 2021?

One way is to shove pertinent data into the prompt you send a priori. You’ll hear Salesforce talk about this as “data grounding”. But there are some pretty harsh limits on grounding. First, there’s a limit on how much data you can send in, generally about 16k tokens, depending on the model. That’s a lot, but not so much that you can dump a CRM system’s worth of data into it. Second, the more data you send, the more sluggish it gets.[3] Third, the more data you send, the more it costs. And, finally, it may not be easy to know what the right data is to send in with your prompt, so you could end up wasting time, money, and effort and still miss the mark.
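To make the size limit concrete, here is a minimal sketch of grounding under a budget. Everything in it is an assumption for illustration: `build_grounded_prompt` is a hypothetical helper, the records are made-up strings, and the budget is counted in characters as a crude stand-in for tokens.

```python
def build_grounded_prompt(question, records, max_chars=2000):
    """Prepend as many records as fit in the budget, then the question.

    Hypothetical helper: a real agent would count tokens, not characters,
    and would rank records by relevance before packing them in.
    """
    context = []
    used = 0
    for record in records:
        if used + len(record) > max_chars:
            break  # budget exhausted: every remaining record is dropped
        context.append(record)
        used += len(record)
    return "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question


# Made-up CRM-style records, purely for illustration.
records = [
    "Order 42: shipped, tracking 1Z999",
    "Order 43: processing",
    "Order 44: delivered",
]
print(build_grounded_prompt("Where is order 42?", records, max_chars=60))
```

Anything past the budget never reaches the model, which is the “still miss the mark” risk: if the record the user needs is the one that got cut, the answer will be wrong no matter how good the model is.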

That’s not to say this approach doesn’t work. If the context of the chat is constrained and you can reasonably and efficiently gather the data the user needs, it can make the chat agent seem omniscient.

But when it doesn’t work, you change your focus: instead of trying to anticipate all the data the agent will need and retrieve it in advance, you try to anticipate the types of data (or, might we say, metadata[4]) the AI agent will need and let the agent ask for more data when it needs it. Instead of push, pull.

Chat with Functions

Functions are a very simple mechanism, but they do work in a fashion that’s a bit counter to what your intuition might suggest. Let’s consider a very simple usage, where you want your chat agent to be able to tell you the current weather:

Here, the user asks for the weather in Boston. GPT doesn’t know that, but we’ve told it that there’s a function that can be called to retrieve the weather. So the agent calls the function, which perhaps goes out to the US National Weather Service (which provides a free API for weather information!), retrieves the data, and the answer bubbles back to the user.
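The “we’ve told it” part is just metadata sent along with the chat. As a rough sketch, a function description might look like the following. The name `get_current_weather` and its parameters are hypothetical; the overall shape (a name, a description, and a JSON Schema for the parameters) is modeled on the style OpenAI’s function calling uses, so check the current API documentation before relying on it.

```python
import json

# Hypothetical function description. Nothing here fetches weather; it only
# tells the model that such a function exists and what arguments it takes.
WEATHER_FUNCTION = {
    "name": "get_current_weather",
    "description": "Get the current weather for a city",
    "parameters": {
        "type": "object",
        "properties": {
            "location": {
                "type": "string",
                "description": "City and state, e.g. Boston, MA",
            },
            "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
        },
        "required": ["location"],
    },
}

# This is roughly what would travel to the model alongside the user's message.
print(json.dumps(WEATHER_FUNCTION, indent=2))
```

Note that the description is plain English. The model reads it to decide when the function is relevant, so vague descriptions tend to lead to missed or mistaken calls.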

Did the chat agent know the weather? No. It had to look it up.

Did it know it could look it up? Yes it did.

How did it know it could? We told it beforehand.

How does it call the function? It doesn’t, you do.

Wait, wut? I have to call the function, not GPT?

Well, gpt-3.5-turbo (or whatever you’re using) doesn’t want to call APIs on its own. In fact, it won’t. Nuh-uh, not gonna do it, no matter how much you try to sweet talk it.

So how does it “call” an API then?

When you call GPT with the user’s question, and GPT decides that an API needs to be called, it returns a particular type of response that says “call this function with these parameters and tell me what it returns.” It’s like some sort of bad rom-com, where the meet-cute couple is having an argument and will only talk to each other through their mutual friend, probably played by Awkwafina. “Tell him to go look up the weather in Boston,” she yells at Awkwafina, who then repeats the question to the man. “Tell her it’s 21°C,” he replies to Awkwafina[5], who then gives the answer to the woman.

Wait, who’s Awkwafina in this scenario and why is she in the middle of your relationship with GPT? She’s your code, the chat agent you wrote that calls GPT. GPT tells it to call the weather service, your code does what it was told, and then it returns the data to GPT so GPT can finish answering the user’s question.
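Here is a minimal sketch of Awkwafina’s job, runnable offline. To be clear about the assumptions: `fake_model` is a hypothetical stand-in for the real LLM call, `get_current_weather` returns canned data instead of calling a weather service, and the message shapes (`function_call`, a `function` role) only loosely mirror OpenAI’s function-calling format.

```python
import json


def get_current_weather(location):
    # Stand-in for a real weather lookup; returns canned data.
    return {"location": location, "temperature_c": 21}


# The functions your code is willing to run on the model's behalf.
AVAILABLE_FUNCTIONS = {"get_current_weather": get_current_weather}


def fake_model(messages):
    """Hypothetical stand-in for the LLM so the sketch needs no network."""
    last = messages[-1]
    if last["role"] == "function":
        # The function result is in hand, so answer the user in prose.
        data = json.loads(last["content"])
        return {
            "role": "assistant",
            "content": f"It's {data['temperature_c']}°C in {data['location']}.",
        }
    # Otherwise, ask the caller to run a function and report back.
    return {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "get_current_weather",
            "arguments": json.dumps({"location": "Boston"}),
        },
    }


def chat(user_text, model=fake_model):
    messages = [{"role": "user", "content": user_text}]
    while True:
        reply = model(messages)
        messages.append(reply)
        call = reply.get("function_call")
        if call is None:
            return reply["content"]  # a plain answer ends the loop
        # The model never runs anything itself: our code runs the function...
        func = AVAILABLE_FUNCTIONS[call["name"]]
        result = func(**json.loads(call["arguments"]))
        # ...and relays the result back as one more message.
        messages.append(
            {"role": "function", "name": call["name"], "content": json.dumps(result)}
        )


print(chat("What's the weather in Boston?"))
```

The loop is the whole trick: each round trip either ends with an answer for the user or with a request that your code has to fulfill before asking again.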

Probably the best way to explain this is through code, and so in the next post we’ll walk through a simple, function-enabled chat agent and follow its dialog.

[1] I so wanted to headline this post as “Conjunction Junction, what's your function?”, but I couldn’t make it work. There are not a lot of songs on the topic of functions that aren’t horrible. Some were by math teachers (good for them). Some I just knew not to click on. Oh, for a Tom Lehrer in our day and age:

(Strictly speaking, Tom is still with us, but at age 95 I think he’s slowed down a bit.)

[2] To be fair to GPT, I couldn’t answer questions about Midnights either. We’re not talking about my generation. Have another Tom Lehrer song.

[3] And a lot of data = a lot of wait.

[4] There’s Chekhov’s gun again, right here in act 1 of the function play.

[5] Either this fictional movie was made in Canada or he’s just being a jerk. Although I suspect Awkwafina could do the conversion in her head, so perhaps she replied to the woman that it’s 70°F in Boston, making Awkwafina a true ETL tool.