Our first ChatGPT project takes an example from the life insurance industry and has GPT recommend a type of policy based on the customer’s stated needs. This is a great example to start with: it demos well and the mechanics are very simple, yet there’s a world of nuance in what it does and how to tune it.

Week one of this newsletter (see part 1 and part 2 if you’ve missed them) was a warm-up, a chance to gently get Salesforce and OpenAI talking. Now let’s do something impressive and channel our inner Ned Ryerson!


Get it Running

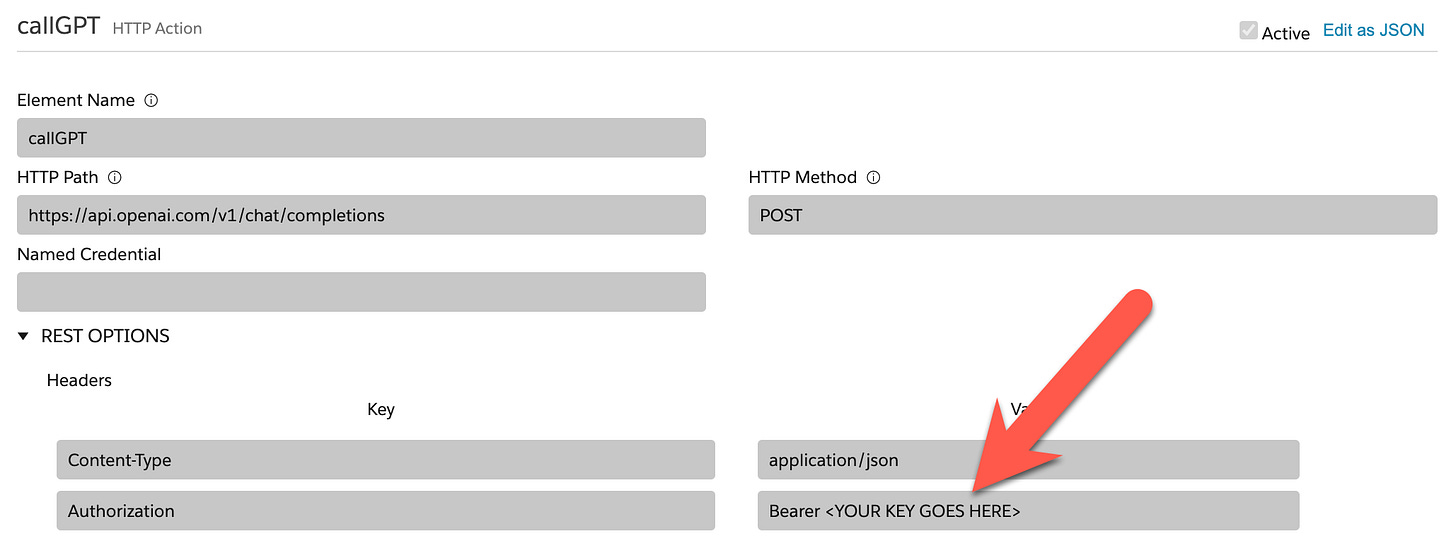

For this project, I’m not going to ask you to build everything step by step like we did last week. Instead, go to my GitHub repository [1] for this project and either clone it or download it. In there is a file, “Life Ins Recommender.json”, which is a DataPack containing both an Integration Procedure and an OmniScript. Import those into Salesforce, but do not activate them yet. First, edit the Integration Procedure: the callGPT element needs your OpenAI API key [2] in its Authorization REST header to work:

Make sure you activate the Integration Procedure when you’re done.

This is fundamentally the same as what we did last week. If you run into problems, make sure last week’s project works first as a debugging step.
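For reference, OpenAI’s API expects the key as a bearer token in the Authorization header. Here is a minimal Python sketch of the header values involved; `build_headers` and the placeholder key are hypothetical names used only for illustration, not something taken from the DataPack:

```python
def build_headers(api_key: str) -> dict:
    """Return the REST headers for a JSON request to OpenAI's API."""
    if not api_key:
        raise ValueError("An OpenAI API key is required")
    return {
        # The key is sent as a bearer token.
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }


# Placeholder key for illustration only; substitute your own.
headers = build_headers("sk-your-key-here")
```

In the Integration Procedure, the same “Bearer ” prefix followed by your key goes into the Authorization header value of the callGPT element.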

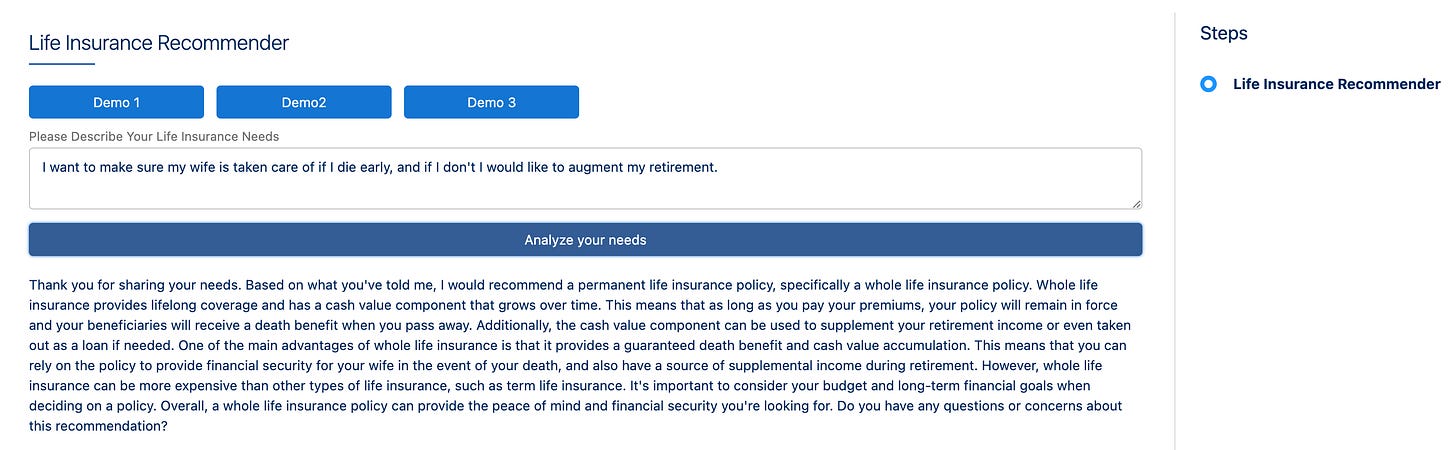

Now, when you open the OmniScript and go into Preview mode, you’ll see you have the option to enter a description of your insurance needs:

The three Demo buttons are there to save you from repetitively typing in various scenarios as you play with it, but you are free to enter any text you like in the “Please Describe …” box. You are able, of course, to change the buttons to anything you like or get rid of them.

Pressing “Analyze your needs” calls the Integration Procedure and awaits its response. To be clear, there’s quite a bit of waiting involved. I hope that this is just growing pains for OpenAI, but at the moment it seems you may wait around 10 seconds before a response arrives [3].

Is That All There Is?

Yes, in that this is all that’s needed to do this simple demonstration.

No, in that there’s a lot more we can do. Make it for another industry? Yep! Have it drive workflow actions in Salesforce? With a bit of elbow grease. Make it a chatbot? Sure. Have it speak a different language? Warum nicht?

We’ll look at a lot of these kinds of questions in subsequent posts as we delve into the workings of our OmniScript and OpenAI’s ChatGPT.

How Does This Demo Work?

Let me quickly walk you through the mechanics of what’s happening here, starting with the OmniScript.

The first thing that happens is a SetValues at the top of the OmniScript. It sets the value of a variable called “thePrompt” to the instructions we send to ChatGPT to explain what its goal is. Playing with this can dramatically change what you get back out of GPT, and the work you invest in getting the response you want is exactly what people call prompt engineering.

The demo buttons are trivial SetValues that preload the Text Area holding the insurance needs input.

The “Analyze your needs” button triggers the call to the Integration Procedure (and does a bit of fancy footwork to pass in just what’s needed). When the response comes back, it’s loaded into the Text Area for the user to read.

The Integration Procedure has three steps.

The first is a SetValues that creates the structure we need to send to the API (you can see it by editing the element’s JSON). It specifies the model we want to use, the “temperature” (degree of randomness in the answers), the prompt (which gets passed as the “system” role), and what the user typed (the “user” role).
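To make that structure concrete, here is a rough Python sketch of an equivalent request body for OpenAI’s chat completions endpoint. It is not the DataPack’s actual JSON: the model name, the temperature value, and both example strings are assumptions chosen for illustration, and `build_chat_request` is a hypothetical helper.

```python
import json


def build_chat_request(the_prompt: str, user_text: str,
                       model: str = "gpt-3.5-turbo",  # assumed model name
                       temperature: float = 0.7) -> dict:  # assumed value
    """Assemble a chat completions request body."""
    return {
        "model": model,
        "temperature": temperature,
        "messages": [
            # The instructions (thePrompt) travel in the "system" role.
            {"role": "system", "content": the_prompt},
            # What the customer typed travels in the "user" role.
            {"role": "user", "content": user_text},
        ],
    }


# Both strings below are made-up examples, not the demo's real prompt.
request_body = build_chat_request(
    "You are a life insurance advisor. Recommend one type of policy.",
    "I am 35, have two kids, and just took out a 30-year mortgage.",
)
print(json.dumps(request_body, indent=2))
```

Lower temperature values make the answers more repeatable, while higher ones make them more varied.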

The callGPT element sends this to GPT over the Internet and collects the response. The Response Action extracts just the data we’re interested in (the response) and sends it back to the OmniScript.
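Outside of Salesforce, those last two steps might look roughly like the Python sketch below. `call_gpt` and `extract_reply` are hypothetical stand-ins for the callGPT element and the Response Action, and the canned response only imitates the shape of a chat completions reply; treat this as an illustration rather than what the Integration Procedure literally executes.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def call_gpt(request_body: dict, api_key: str, timeout: float = 60.0) -> dict:
    """POST the request body to OpenAI and return the parsed JSON reply."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(request_body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    # Generous timeout, since responses can take several seconds.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)


def extract_reply(response: dict) -> str:
    """Pull just the assistant's text out of the full API response."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("Response contained no choices")
    return choices[0]["message"]["content"].strip()


# A canned, made-up response so the extraction can be tried without a key.
sample_response = {
    "choices": [
        {
            "index": 0,
            "message": {"role": "assistant", "content": " Term life insurance. "},
            "finish_reason": "stop",
        }
    ]
}
reply = extract_reply(sample_response)
```

With a real key, `extract_reply(call_gpt(request_body, api_key))` would chain the two steps the same way the Integration Procedure does.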

That’s it!

Over the next few posts we’ll discuss all sorts of things about this simple demo and the ways you can make it better or different. There’s a lot to discuss, but get this working and have fun just playing with it!


[1] FYI, the contents of the GitHub repository are covered by an MIT license, which means you are allowed to use them however you wish.

[3] If you’ve used ChatGPT, you might not have noticed that it’s about as slow, because it gives the impression of being faster by delivering the text in small incremental bursts. The API supports this “streaming” delivery of text so that custom apps can mimic it. Unfortunately, it’s far, far beyond the scope of a simple demo to build that capability into our OmniScript.

Awesome demo! It’s a simple one, but it does bring the ChatGPT goodness into OmniScript!

Would it be possible for ChatGPT to ingest a set of products and recommend them based on the user inputs?