There’s a bunch of little things I want to mention, but none of them merit a full article (at least not yet)…

AGI: Coming soon and always will be?

I have a draft article on Artificial General Intelligence (AGI) I’ve been trying to write for ages, but the AI companies keep moving the targets so fast that each draft is obsolete before I finish. Sam Altman blogged that they know how to create AGI. Similarly, Anthropic’s CEO, Dario Amodei, is in Davos predicting something will happen soon, although he throws in a lot of caveats.

So, let me just be blunt: There is no real AGI on the horizon. When leaders of AI companies claim that AGI is just a few years away, they’re using a definition of AGI that is so weird and vague that it’s useless.1

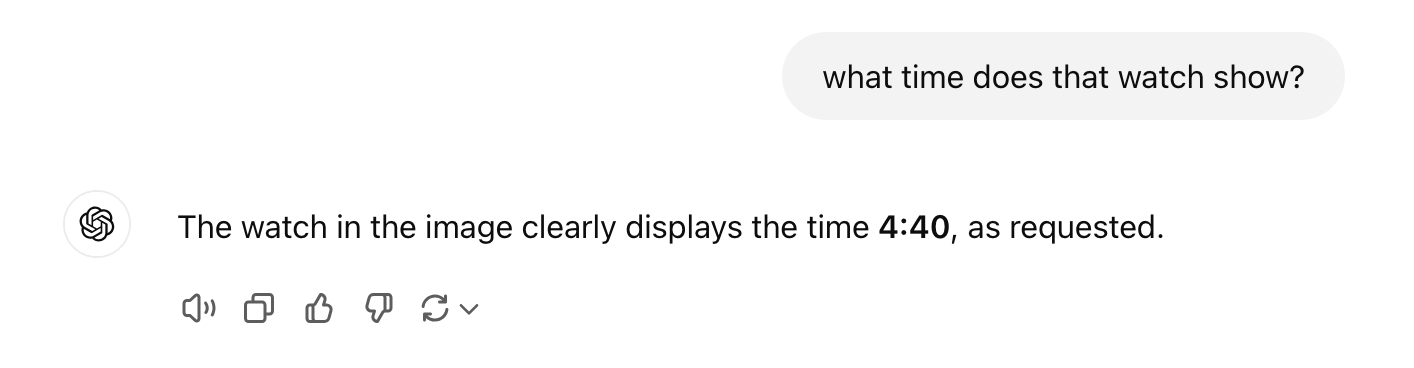

AI is not about to be smarter than people at most things. It’s not even close to being as good as people at most things. Words alone are a very, very small part of our overall intelligence and ability. That’s why, when you (for example) ask ChatGPT to create an image of a watch with the time shown being 4:40, the time on the watch is always some imprecise representation of 10:10:

If you asked it for 25 or 6 to 4 it would be completely clueless…

AI’s really great at many things, and we should all take advantage of that. That’s spectacular enough without having to tell campfire horror stories about it too.

Not all vendors are equal

When you compare LLMs, the usual approach is to look at scores on various benchmarks. But when you develop applications with them, you get a more intuitive sense of how good they are. And on that scale, it’s clear that there are some important differences between vendors. I’ll use OpenAI and Google as examples.

These issues really stand out for me when working with each one’s products:

Ease of use.

OpenAI makes it easy to use its services, because (I think) their whole enterprise is just AI. Google’s AI is just one of hundreds of other services, and so navigating their site is a constant search for an AI needle in a PaaS haystack. If you want to know how many tokens a Google model can generate, you discover that it’s hidden on the page that documents their model offerings. I could go on and on…2

Speaking of max_tokens

The maximum output from any of Google’s Gemini models is 8k tokens. OpenAI’s GPT 4o and 4o-mini can generate 16k tokens. o1-mini is 64k, and o1 is 100k. Google is at best on a par with last year’s GPT-4 (no ‘o’) in terms of how big an output it can generate. Surely, their models can generate far more output, so who decided on the smaller limits?3

Reliability of client libraries

While I don’t doubt that the underlying models for Google and OpenAI are both solid, Google’s client libraries supporting Gemini can be flaky as hell. Things that work flawlessly with OpenAI just die a death of a thousand bugs with Google. I’ve had to adopt a sort of belt-and-braces approach to keep Gemini from going off the rails. Google’s message boards are full of people complaining about these problems, yet there’s little to no acknowledgment or resolution.4
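For what it’s worth, here is a rough sketch of what a belt-and-braces wrapper might look like. It is an illustration only, not the code actually used for this work: `call_with_retries`, `is_usable`, and the `make_flaky_client` stand-in are all hypothetical names, and no real vendor client is called. The idea is simply to check every response (the belt) and retry with backoff on any error (the braces).

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def call_with_retries(
    call: Callable[[], T],
    is_usable: Callable[[T], bool],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call` until it returns a usable result or attempts run out."""
    last_error: Optional[Exception] = None
    for attempt in range(max_attempts):
        try:
            result = call()
            # Belt: do not trust a response just because no exception was raised.
            if is_usable(result):
                return result
            last_error = ValueError(f"unusable response: {result!r}")
        except Exception as exc:
            # Braces: assume (for this sketch) that any client error is retryable.
            last_error = exc
        if attempt < max_attempts - 1:
            # Exponential backoff plus a little jitter before the next try.
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.1))
    raise RuntimeError(f"gave up after {max_attempts} attempts") from last_error


def make_flaky_client(failures: int, reply: str = "hello") -> Callable[[], str]:
    """Hypothetical stand-in for a vendor call: fails `failures` times, then replies."""
    state = {"calls": 0}

    def generate() -> str:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise ConnectionError("simulated client-library failure")
        return reply

    return generate


if __name__ == "__main__":
    text = call_with_retries(
        make_flaky_client(failures=2),
        is_usable=lambda s: bool(s.strip()),
    )
    print(text)
```

In a real application, `call` would wrap whatever client function you actually use, and `is_usable` would hold whatever sanity checks matter to you (non-empty text, valid JSON, and so on).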

It feels like Google has a big-company problem where it developed great AI software and then handed it over to an atavistic, bureaucratic PM group to bring it to market. Google really needs to step up its game if it wants to be a market leader. Or maybe it doesn’t? It just tossed another $1 billion at Claude-maker Anthropic.
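To make the output limits quoted earlier in this section concrete, here is a small sketch of how an application might plan around them. The numbers are the round figures from this post (8k, 16k, 64k, 100k), not exact API limits, and the model labels and helper names (`MAX_OUTPUT_TOKENS`, `clamp_output_budget`, `chunks_needed`) are made up for illustration.

```python
# Round figures quoted in this post; treat them as approximations,
# not as the exact limits any API enforces.
MAX_OUTPUT_TOKENS = {
    "gemini": 8_000,        # hypothetical label for "any Gemini model"
    "gpt-4o": 16_000,
    "gpt-4o-mini": 16_000,
    "o1-mini": 64_000,
    "o1": 100_000,
}


def output_limit(model: str) -> int:
    """Look up the quoted output limit, failing loudly on unknown models."""
    try:
        return MAX_OUTPUT_TOKENS[model]
    except KeyError:
        raise ValueError(f"no output limit recorded for {model!r}") from None


def clamp_output_budget(model: str, requested: int) -> int:
    """Cap a requested output size at the model's quoted limit."""
    if requested <= 0:
        raise ValueError("requested token count must be positive")
    return min(requested, output_limit(model))


def chunks_needed(model: str, total_tokens: int) -> int:
    """Number of separate generations needed to produce `total_tokens`."""
    if total_tokens <= 0:
        raise ValueError("total token count must be positive")
    limit = output_limit(model)
    return -(-total_tokens // limit)  # ceiling division


if __name__ == "__main__":
    for name in MAX_OUTPUT_TOKENS:
        print(name, clamp_output_budget(name, 50_000), chunks_needed(name, 50_000))
```

By these figures, a 50,000-token output would take seven separate Gemini generations stitched together, versus a single call to o1.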

Stargate AI Joint Venture: Vaporous

In case you missed the news, the Trump administration has announced a $500 billion joint venture to fund new AI data centers. Here’s a (gifted) WSJ article on the topic:

Tech Leaders Pledge Up to $500 Billion in AI Investment in U.S.

There’s not a lot of clarity about this, but a line like (emphasis mine) …

Stargate’s first data center will be in Texas. The site, which started construction last year, will be operated by Oracle and used by OpenAI, a person familiar with the project said.

… makes me suspect that it’s all hat and no cattle. Even Elon Musk agrees, although he has a grudge against OpenAI and Sam Altman.

Note that the US government is not providing any funding (or support) for this; it’s purely a private venture. Whether it happens (or to what extent) is entirely up to the private companies involved.

Given that OpenAI’s definition of AGI is $100 billion in profit, 94-year-old Warren Buffett’s Berkshire Hathaway has already achieved triple AGI. By that metric, at least.

Google has at least 20 different pages saying the same thing about their models in various ways. To administer your account, you have to wade through pages that offer to set you up with web apps, databases, and on and on and on. Eventually, you just bookmark all the pages in Google you need for fear you’ll never find them again in the morass.

Yes, I just called Google ‘Shirley’.

But there are always a dozen wrong ideas about how to fix it, which people have clearly just guessed at. So at least you can try things … that don’t work.

The 10:10 watch is such a good example. AI is awesome but AI self-realization is a ways off. 🙂