In the last post, I talked about taking an older Slack translation app that had been built on pay services and revising it to use free ones. It turns out that the translation tool it delivers doesn’t get upset by errors: the results of the call to the LLM are given to the user as-is, and humans are pretty fault-tolerant. “Laissez les bons temps rouler”, as they don’t say in France.[1]

But there are other apps we might like to build where we can’t be so forgiving: ones where we’re relying on getting the right data in the right format because the direct consumer of the AI is code, not people. Unfortunately, the free services we want to use are much more error-prone than the high-end paid-for ones: Llama 8B has roughly 1/20th of the parameters of GPT-3, and presumably an even smaller fraction of the more modern GPT-3.5-Turbo or GPT-4o, whose sizes aren’t public. It’s going to mess up much more often.

I built a test case to see what kind of errors we get and how we can recover from them. It’s a News Reader application that uses AI to surface the stories the user is most interested in. The AI is used by the program to decide what to show, and its output is only indirectly presented to the user.

The source to the application is available here:

https://github.com/cmcguinness/newsreader

I’ll review the code in subsequent posts, but for now I want to just explain what it does and the lessons it taught me about free LLMs.

Let me start with a quick overview of the application and how it makes use of Free AI.

Overview of the Application

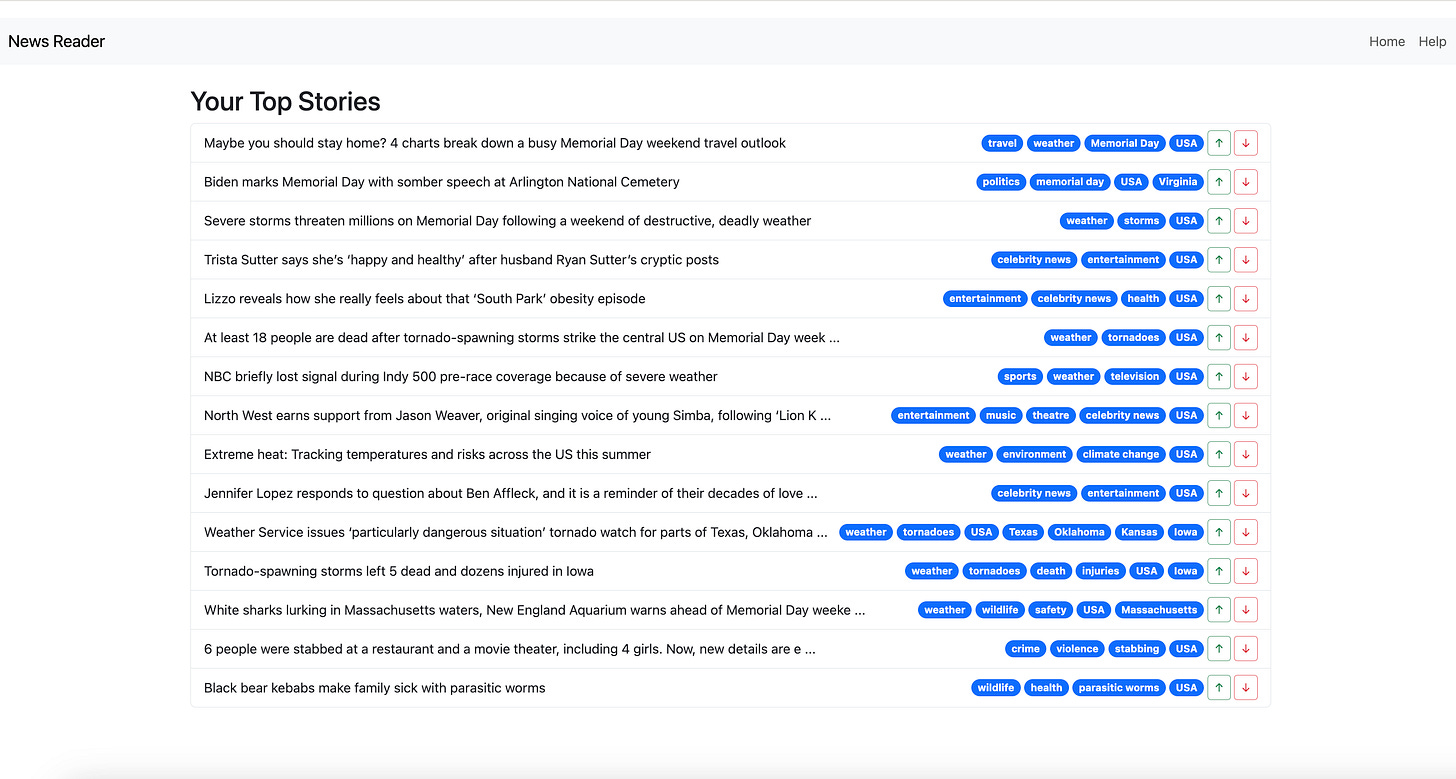

The application shows a list of recent news stories with the stories you’re most likely to be interested bubbled up to the top:

If you click through to read a story, its tags (those things in the blue ovals) all get a bit of a bump up in value. If you click the down arrow, its tags get knocked down a bit. Over time, the application learns which tags you like (and dislike) and can sort the stories appropriately.

To do this we need a few things:

A free source of news articles that we can access through an API or scraping

A free LLM that can generate tags for the articles

A means of tracking the user’s interactions with the stories to learn, over time, what they like and don’t like.

All the News that Scrapes!

There aren’t a lot of APIs for retrieving news articles, and even fewer that are free to access (maybe even zero). However, many news organizations maintain text-only websites for people with slow connections, and those are just about as good. The one I used is the CNN Lite website, which displays headlines in a format that is super easy to scrape.

No ads, no autoplay videos, just the news. It’s actually kind of amazing.

The application fetches the main CNN Lite page, finds all the links, discards the few that aren’t news stories, and generates a list of headlines and URLs.
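
As a rough illustration of that fetch-and-filter step (a sketch, not the code from the repository), here is one way to do it with only the Python standard library. The base URL, the `extract_headlines` and `fetch_headlines` names, and especially the rule that story links start with a four-digit year are all assumptions made for the example; the real page layout may differ.

```python
import re
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen

BASE_URL = "https://lite.cnn.com"  # assumed address of the CNN Lite front page


class LinkCollector(HTMLParser):
    """Collects (href, link text) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace inside the link text
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def extract_headlines(html, base_url=BASE_URL):
    """Return [{'headline': ..., 'url': ...}] for links that look like stories."""
    collector = LinkCollector()
    collector.feed(html)
    stories = []
    for href, text in collector.links:
        # Assumption: story paths begin with a year, e.g. /2024/05/01/world/...
        if text and re.match(r"^/\d{4}/", href):
            stories.append({"headline": text, "url": urljoin(base_url, href)})
    return stories


def fetch_headlines(url=BASE_URL):
    """Download the front page and extract the story links from it."""
    with urlopen(url, timeout=10) as response:
        html = response.read().decode("utf-8", errors="replace")
    return extract_headlines(html, base_url=url)
```

Keeping the parsing in `extract_headlines` separate from the download makes it possible to test the filter against a saved copy of the page without hitting the site.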

Note that I don’t fetch each of the articles as well. One might, but the headlines are enough for the current task.

Put it to the LLM

We feed the headlines to our LLM with a prompt that asks it to generate tags for each headline. The pluses of doing this are that the headlines are compact and easy for the LLM to digest. The minuses are that sometimes the headline is too vague and the LLM generates bad tags. But that’s a kind of error that’s not very disruptive.
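
To make the shape of that request concrete, here is a minimal sketch. The prompt wording, the `id`/`tags` reply format, and the function names are hypothetical and are not taken from the application; the messages use the common role/content chat layout, which your provider’s client may or may not expect.

```python
import json

# Hypothetical system prompt; the application's real prompt is not shown in this post.
SYSTEM_PROMPT = (
    "You label news headlines with topic tags. "
    "Reply with only a JSON array. Each element must be an object with the keys "
    '"id" (the number given with the headline) and "tags" (a list of 2 to 4 '
    "short lowercase strings)."
)


def build_tag_messages(headlines):
    """Build chat messages asking for tags for a numbered batch of headlines."""
    numbered = "\n".join(f"{i}. {h}" for i, h in enumerate(headlines, start=1))
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"Headlines:\n{numbered}"},
    ]


def tags_by_headline(headlines, reply_text):
    """Match a well-formed reply back to the headlines it was asked about."""
    tagged = {}
    for item in json.loads(reply_text):
        index = item["id"] - 1
        # Ignore ids that don't correspond to a headline we sent
        if 0 <= index < len(headlines):
            tagged[headlines[index]] = [str(t).lower() for t in item["tags"]]
    return tagged
```

Numbering the headlines and asking for the number back gives the code something to check the reply against, rather than trusting the model to echo each headline exactly.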

Tracking the User’s Likes and Dislikes

As we see more and more articles go by, we’re able to build a database of tags. As the user reads, likes, and dislikes articles, the scores for those articles’ tags are adjusted up or down. We compute an overall score for each headline based upon the score of each of its tags, and then use that score to rank the articles.
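
A minimal sketch of that bookkeeping follows. The step size, the choice to average tag scores, and the `TagScores` class itself are assumptions for illustration; the post only says tags get bumped “a bit” and that a headline’s score is based on its tags.

```python
from collections import defaultdict

BUMP = 1.0  # assumed step size for one click


class TagScores:
    """Keeps a running score per tag and ranks stories by their tags."""

    def __init__(self):
        self.scores = defaultdict(float)

    def like(self, tags):
        """User clicked through to read the story: nudge its tags up."""
        for tag in tags:
            self.scores[tag] += BUMP

    def dislike(self, tags):
        """User clicked the down arrow: nudge its tags down."""
        for tag in tags:
            self.scores[tag] -= BUMP

    def headline_score(self, tags):
        # Assumption: average the tag scores so long tag lists don't dominate.
        if not tags:
            return 0.0
        return sum(self.scores.get(tag, 0.0) for tag in tags) / len(tags)

    def rank(self, stories):
        """Return stories sorted from most to least likely to interest the user."""
        return sorted(
            stories,
            key=lambda story: self.headline_score(story["tags"]),
            reverse=True,
        )
```

Unknown tags score zero here, so brand-new topics land in the middle of the list rather than at either end.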

Easy Peasy, right?

Some Challenges

It would be Easy Peasy if the LLMs all worked perfectly every time. But when you use free Small LLMs[2], they demonstrate the rigorous precision of a herd of cats.[3]

Here are the kinds of errors I’ve seen:

Just no response at all or an error

Off-Topic Response – it just starts babbling about something unrelated for no particular reason

Answers the question, but does not respond as directed (you ask for JSON and get unformatted text, for example).

Bad answers: it gives you an answer, but not a correct one (hallucination, etc.)

Incomplete or Incorrect Results: You ask for JSON, but it generates strings with single quotes, which causes your parser to throw an exception.

Why do these errors happen?

If you get no response at all, or an error, the odds are:

We’re rate limited

The server is down

There’s a coding or configuration error

We’ve exceeded context window size

If you get some sort of answer back, but not one that meets your needs, you may need a better prompt, especially if the model is wandering way off topic. But improving prompts yields diminishing returns, and you may find that no matter how hard you work on it you still aren’t getting the output you want. In that case, the problem may lie more in the structure of how you’re using the LLM than in the prompt itself.

If the answers are frequently wrong (but still “look good”), there are a few causes I’ve seen:

The request asks for something too complicated. The amount of “think time” you get from an LLM is roughly tied to the size of the output, so if you ask for a lot of work to generate a compact result you may not have gotten the LLM to “think” hard enough. That’s where the “let’s take it step-by-step” prompting style comes into play.

The request is too big: In the news reader application, if you ask a small LLM to generate tags for a single headline, it does a solid job. If you ask for 5 headlines at a time, it starts to make mistakes. The best solution is to skinny down your request, either by simplifying your prompts or by reducing the number of things you want done in a single call.

When you’ve exhausted all these and still aren’t quite there yet, you have two last arrows in your quiver:

Tolerance: Try to be able to handle “slightly” defective results. For example, you ask for JSON and the LLM makes a simple mistake, like using single quotes instead of double quotes, or leaving off a closing “]”. The JSON parser in your library isn’t going to cope with that. But if you have a very tolerant JSON parser[4], the error can be remediated.

Retries: Don’t just try the exact same thing over and over again. Here are some ideas for how your code could try to recover in real time:

If your first call fails, sleep for a moment and send the request in again (in case of rate limit, server overload, or other weird glitches).

Increase the “temperature” temporarily to try to get a different response. Normally, I like a more deterministic response and use a low temperature, but if that fails, turn up the heat and see if that improves things.

Have an alternative system prompt just in case the particular data you’re sending in clashes in some way with the normal system prompt.

Reduce the complexity. For example, if we’re asking the LLM to generate tags for 5 headlines and it fails, drop to 4 at a time instead.

But be careful: if things still aren’t working after a few retries, it’s time for the program to exit and ask the developer to fix the problem.
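
The retry ideas above can be strung together into an escalation ladder. The sketch below is one possible arrangement, not the application’s actual logic: `call_llm` and `parse` are hypothetical callables you would supply, and the delays, temperatures, and order of the steps are assumed values chosen for illustration.

```python
import time


class LLMGaveUp(RuntimeError):
    """Raised when every recovery step has failed; a human needs to look."""


def tag_batch_with_retries(headlines, call_llm, parse, sleep=time.sleep):
    """Try one batch of headlines, escalating through recovery steps.

    call_llm(headlines, temperature, use_alt_prompt) -> str  (hypothetical wrapper)
    parse(text) -> parsed result, raising an exception on bad output
    Returns (parsed_result, number_of_headlines_covered).
    """
    full = len(headlines)
    # Each step: (seconds to wait first, temperature, use alternate prompt, batch size)
    steps = [
        (0, 0.1, False, full),              # normal request
        (2, 0.1, False, full),              # same request after a pause
        (2, 0.7, False, full),              # turn up the heat
        (2, 0.1, True, full),               # alternative system prompt
        (2, 0.1, False, max(1, full - 1)),  # ask for less in one call
    ]
    errors = []
    for delay, temperature, use_alt_prompt, size in steps:
        if delay:
            sleep(delay)
        try:
            reply = call_llm(headlines[:size], temperature, use_alt_prompt)
            return parse(reply), size
        except Exception as exc:  # rate limits, timeouts, unparseable output
            errors.append(f"{type(exc).__name__}: {exc}")
    # Nothing worked: stop and surface every failure to the developer
    raise LLMGaveUp("; ".join(errors))
```

Returning the number of headlines actually covered lets the caller send any leftover headlines in a later call when the batch had to be shrunk. Passing `sleep` in as a parameter keeps the function easy to test without real delays.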

With small LLMs, you have to assume that any given response is going to be problematic and you need the ability to recover from errors. Our newsreader program probably would fail constantly if it had zero tolerance for errors.
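
As for the tolerance side, a forgiving JSON parser does not have to be elaborate. The sketch below is a hypothetical helper, not the one in the repository; it handles only the mistakes mentioned above (single quotes, a missing closing bracket) plus a chatty preamble before the JSON. It leans on Python’s `ast.literal_eval`, which happens to accept single-quoted strings.

```python
import ast
import json


def close_brackets(text):
    """Append any closing quote, bracket, or brace that the text is missing."""
    stack = []
    in_string = None  # the quote character we are inside, if any
    escaped = False
    for ch in text:
        if in_string:
            if escaped:
                escaped = False
            elif ch == "\\":
                escaped = True
            elif ch == in_string:
                in_string = None
        elif ch in "\"'":
            in_string = ch
        elif ch in "[{":
            stack.append("]" if ch == "[" else "}")
        elif ch in "]}" and stack and stack[-1] == ch:
            stack.pop()
    if in_string:
        text += in_string
    return text + "".join(reversed(stack))


def tolerant_json_loads(text):
    """Parse slightly broken JSON, or raise ValueError if it can't be repaired."""
    text = text.strip()
    # Drop anything before the first bracket, such as "Sure! Here are the tags:"
    starts = [i for i in (text.find("["), text.find("{")) if i != -1]
    if starts:
        text = text[min(starts):]
    for candidate in (text, close_brackets(text)):
        try:
            return json.loads(candidate)
        except json.JSONDecodeError:
            pass
        try:
            # Accepts single-quoted strings, but not true/false/null
            return ast.literal_eval(candidate)
        except (ValueError, SyntaxError, TypeError):
            pass
    raise ValueError("could not repair the response into JSON")
```

This will not rescue everything: trailing chatter after the JSON, or single-quoted output that also uses `true`, `false`, or `null`, still ends in a `ValueError`, which is the signal to fall back to a retry.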

And while a GPT-3.5-level LLM is very unlikely to produce results with errors, it still does happen. There’s value in playing with smaller, more error-prone LLMs: you see problems at a much higher rate and so have to get good at dealing with them. To paraphrase John Curran[5], the price of free is eternal vigilance.

[1] But they do say it in Louisiana.

[2] Or SLMs, as Microsoft likes to call them now, setting up a future slapstick routine where somebody thinks we need to deploy Sea Launched Missiles, not Small Language Models.

[3] Which reminds me of one of the all-time best Super Bowl commercials, bringing a tired cliche to life.

[4] Have, in the sense of you write one.

[5] Quite the guy, as it turns out: https://en.wikipedia.org/wiki/John_Philpot_Curran
