AI itself can be kind of dry and technical. But the people involved, oh my, they can be nasty, arrogant, aggressive, and spectacularly wrong. That’s to be expected, of course, people being people, but there’s one case where a high-school rivalry spilled over into AI research and ended up stalling AI’s advancement for decades. A case where a researcher who was in fact prophetic was nonetheless mocked and backstabbed by another who was wrong yet hailed as a leading light of computer science.

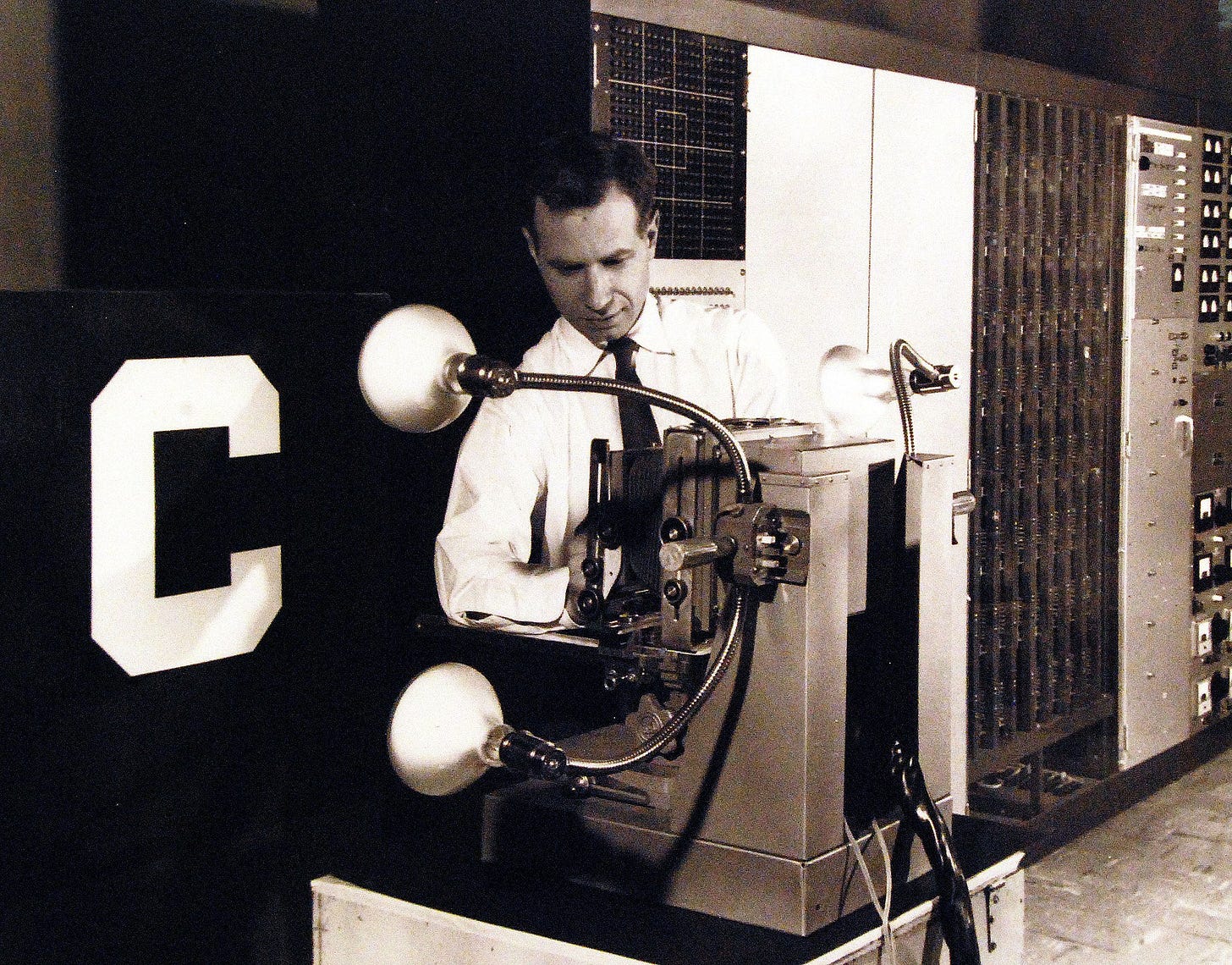

So who were these men, and what the heck happened? Perhaps the best place to start is with this article in The NY Times from July 8, 1958:

That, gentle reader, was the very first substantial neural network implementation, and it was invented by a research psychologist trying to understand intelligence. It is hard to overstate how influential it is: GPT-4 is basically just a few billion of these things lashed together.

In 1957, Frank Rosenblatt was a newly minted psychology Ph.D. working as a researcher at Cornell, intrigued by intelligence and how it worked. He had a lot of wild theories, including that you could transfer learning from one rat to another by extracting RNA from the brain of one and injecting it into another. But he also had an idea that you could build electronic neurons to replicate human intelligence using an algorithm invented during the war years which had yet to be successfully implemented. His goal was to use the algorithm to build a system that could read and, ultimately, think.

Imagine you’re in his place at that time. It’s the late 1950s and you want to create a system that can do optical character recognition (OCR). That’s not even a thing back then. How the heck are you going to do that?

You can’t just pick up a webcam at Radio Shack — in fact there aren’t any commercial digital sensors at all. So you have to cobble something together yourself. There are these things called photocells that have been around for about a hundred years and are still in wide use:

Basically, the more light you shine on it, the more electricity it lets through: a b&w pixel. You could definitely get these from Radio Shack in the 1950s. Take a bunch of them (let’s say 400), put them into a 20x20 grid, focus an image on them … and you have 1958’s best (and only) digital camera, with 400 mega kilo really big pixel resolution! Here’s a photo of the sensor:

It was part of a device to project letter forms onto the sensor:

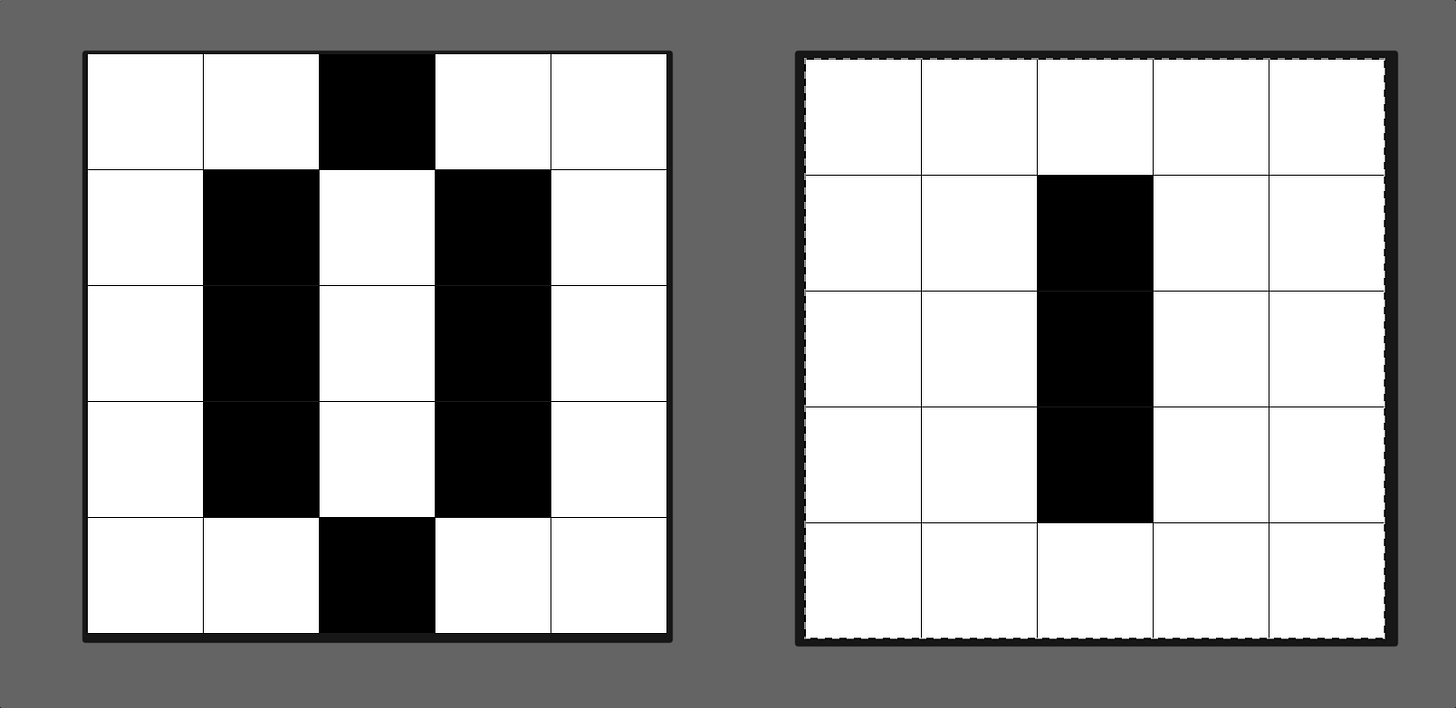

If you project a “C” like this:

Onto the sensor, it outputs something like:

Which is definitely good enough to recognize!

But how do we build AI to recognize this using 1958’s technology? There’s a bit of a clue in the article above: Rosenblatt was using a $2,000,000 IBM 704 computer, which was quite good at math.¹ Neural networks are just lots and lots of multiplications and additions, and this was in the 704’s sweet spot. Interestingly, Rosenblatt even looked to build a hardware version of the neural network:

He was ahead of the game on specialized neural network hardware as well!²

To explain how this all worked, let’s look at a simplified example, where we’re using a 5x5 sensor instead of Rosenblatt’s 20x20 sensor. For this example, we just want to tell 0 and 1 apart when we see them. Here’s an example of what 0 and 1 look like on our 5x5 sensor:

That’s definitely good enough to tell them apart.

These two images can be represented as vectors.³

0: [0 0 1 0 0 0 1 0 1 0 0 1 0 1 0 0 1 0 1 0 0 0 1 0 0]

1: [0 0 0 0 0 0 0 1 0 0 0 0 1 0 0 0 0 1 0 0 0 0 0 0 0]

If you do a matrix multiply⁴ of either with …

Neural Net: [0 0 -1 0 0 0 -1 1 -1 0 0 -1 1 -1 0 0 -1 1 -1 0 0 0 -1 0 0]ᵀ

… you’ll get a 0 when the sensor “sees” a zero and a 1 when it “sees” a one, once you treat any positive sum as a 1 and anything else as a 0. (The raw sums are -8 for the zero and 3 for the one.) Again, this is a simplified version of what Rosenblatt did.
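The multiply-and-add step can be sketched in a few lines of plain Python. This is only an illustration of the toy example: the names `dot` and `classify` are mine, and the "anything positive is a one" threshold is an assumption that mirrors the usual perceptron step function, not a detail taken from Rosenblatt's design.

```python
# The two 5x5 images from the text, flattened row by row into 25 numbers.
ZERO = [0, 0, 1, 0, 0,
        0, 1, 0, 1, 0,
        0, 1, 0, 1, 0,
        0, 1, 0, 1, 0,
        0, 0, 1, 0, 0]
ONE = [0, 0, 0, 0, 0,
       0, 0, 1, 0, 0,
       0, 0, 1, 0, 0,
       0, 0, 1, 0, 0,
       0, 0, 0, 0, 0]

# The hand-written "neural net" weights from the text.
WEIGHTS = [0, 0, -1, 0, 0,
           0, -1, 1, -1, 0,
           0, -1, 1, -1, 0,
           0, -1, 1, -1, 0,
           0, 0, -1, 0, 0]


def dot(image, weights):
    """Multiply each pixel by its weight and add up the products."""
    return sum(p * w for p, w in zip(image, weights))


def classify(image, weights=WEIGHTS):
    """Assumed step function: a positive sum means "one", otherwise "zero"."""
    return 1 if dot(image, weights) > 0 else 0


print(dot(ZERO, WEIGHTS), classify(ZERO))  # -8 0
print(dot(ONE, WEIGHTS), classify(ONE))    # 3 1
```

The lit pixels of the zero only ever land on -1 weights, and the lit pixels of the one only land on +1 weights, which is why the two sums fall on opposite sides of the threshold.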

In the end, his Perceptron was able to “read”, one letter at a time. Rosenblatt details the full design in a 1958 paper. Of course, the Navy went from “it can read letters slowly” right to “it will soon achieve consciousness”, which really hasn’t happened. Keep that in mind when Sam Altman says AGI is right around the corner!

The [0 0 -1 0 0 0 … 0] above are the 25 parameters in my toy neural network. So when you hear a model like Llama 3.1 has 405 billion parameters, know that it’s the same thing, only a wee bit larger and a wee bit more complicated. (The source code for mine is in a footnote.⁵)
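Those 25 numbers don't have to be written by hand; they can be learned from examples. Here is a minimal sketch of the classic perceptron update rule in plain Python. Treat it as an illustration, not as Rosenblatt's actual procedure or as what scikit-learn does internally. The function names, the starting weights of zero, the step size of 1, and the limit of 10 passes are all my assumptions.

```python
ZERO = [0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0]
ONE = [0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0]


def predict(weights, bias, image):
    """Weighted sum of the pixels plus a bias, then a step at zero."""
    score = bias + sum(w * p for w, p in zip(weights, image))
    return 1 if score > 0 else 0


def train(examples, passes=10):
    """Nudge the weights toward each misclassified example until none remain."""
    weights = [0] * 25  # the 25 parameters, all starting at zero (assumption)
    bias = 0
    for _ in range(passes):
        mistakes = 0
        for image, label in examples:
            error = label - predict(weights, bias, image)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                bias += error
                weights = [w + error * p for w, p in zip(weights, image)]
        if mistakes == 0:  # every example classified correctly, so stop
            break
    return weights, bias


weights, bias = train([(ZERO, 0), (ONE, 1)])
print("Learned weights:", weights)
print(predict(weights, bias, ZERO), predict(weights, bias, ONE))  # 0 1
```

With these particular starting values and this example order, the rule settles after three passes on exactly the hand-written weight vector used earlier, with a bias of 0. A different order or starting point could land on a different, equally valid set of weights.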

So, here we are, it’s the early 1960s and Rosenblatt has invented both neural networks and specialized hardware for them. In an alternate reality, the emergence of integrated circuits around the same time could have significantly propelled AI research. ICs can be built with analog multiplication units using just a few transistors each, meaning that Moore’s law would have quickly enabled building large scale AI hardware … by the 1970s. (BTW, analog is good enough for AI.⁶) But instead of getting powerful AI, we got (checks notes) Pong. How come this alternate reality didn’t happen? Good question!

As Rosenblatt was making his breakthroughs, you might think that the boffins of Computer Science would have lofted him onto their shoulders and cried out for increased funding of his work.

And did they?

Oh hell no they did not.

Rosenblatt and another (very well known) Computer Scientist, Marvin Minsky, had gone to high school together, and clearly had something of a rivalry. Minsky had tried to build his own neural network in 1951, called (ironically) SNARC. And while he was somewhat impressed with his results, he ultimately decided that it wasn’t powerful enough to be worth pursuing further. Which, as it turns out, was only true because he made some bad design decisions. But his experience was enough to convince Minsky that nobody could build a useful neural network because, you know, he couldn’t.

And their rivalry caused Minsky to ridicule Rosenblatt’s work, demean it as flawed, and even to write a book with Seymour Papert that at best damned it with faint praise. Perhaps Minsky was upset over the grandiose claims made about the Perceptron. Regardless, because of Minsky’s backstabbing, Rosenblatt’s AI funding dried up, derailing the most productive avenue of AI research.

Conversely, Minsky’s approaches to AI were well funded for quite a long while until they eventually flamed out.⁷ Computer Science, having first shunned the right approach, had now failed with the wrong one. As a result, new funding for AI research became very hard to come by during the “AI Winter” that lasted to the late 1990s.

Frank Rosenblatt should have lived into the 2000s to see his early work become the foundation of modern AI and claim his fame, but tragically he died young in 1971 in a sailing accident.

Marvin Minsky, on the other hand, went on to live a long life, dying in 2016, having won many awards, including, ironically, for early work on neural networks. That’s some ugly historical revisionism about a guy who did his best to kill the Perceptron and neural networks.

Frank Rosenblatt’s memory is a blessing. Others’ memories are more a cautionary tale about pride, stubbornness, and selfishness.

¹ It also had the first implementation of FORTRAN, although I cannot tell if Rosenblatt used it or hand wrote machine code.

² Which is a task GPUs are designed to perform in parallel, which makes them incredibly fast and valuable for AI. Sorry, gamers.

³ It does not matter if 0 or 1 is used for white.

⁴ Multiply each pair of numbers (one from the image, one from the “neural network”) and then add them up.

⁵ Note that the name of the AI we are using is “Perceptron!”:

    from sklearn.linear_model import Perceptron
    import random

    # The 5x5 images of "0" and "1", flattened row by row
    zero = [0, 0, 1, 0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0]
    one = [0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 0]

    def make_train():
        """Build 100 training examples, each randomly a zero or a one."""
        x = []
        y = []
        for i in range(100):
            # randomly pick zero or one
            if random.randint(0, 1) == 0:
                x.append(one)
                y.append(1)
            else:
                x.append(zero)
                y.append(0)
        return x, y

    percep = Perceptron()
    x, y = make_train()
    percep.fit(x, y)

    # Print the final weights (coefficients)
    print("Final weights:", percep.coef_)
    print(percep.predict([zero]))
    print(percep.predict([one]))

⁶ Like these people are doing today: https://mythic.ai

⁷ There was, frankly, a lot of self-indulgent crap.
